Proposed AI framework may entrench bias against minorities, groups caution

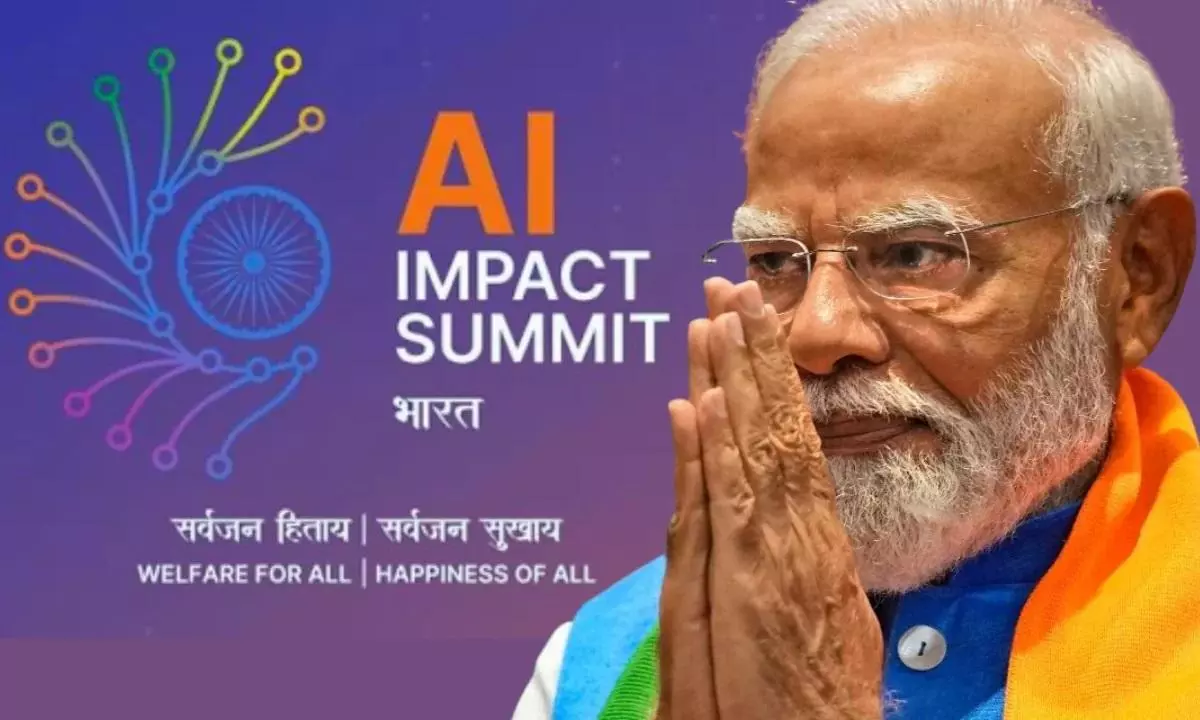

As the AI Impact Summit 2026 is set to commence in New Delhi on Monday, a new report by the Center for the Study of Organized Hate and the Internet Freedom Foundation has warned that India’s proposed artificial intelligence governance framework, while projecting inclusivity and innovation, may disproportionately harm religious minorities, Dalit and Adivasi communities, and sexual and gender minorities in the absence of binding safeguards.

Titled AI Governance at the Edge of Democratic Backsliding, the report argues that the Union government’s preference for voluntary compliance and self-regulation over enforceable statutory protections risks entrenching structural inequalities, particularly because marginalised communities often lack the financial and institutional capacity to challenge opaque algorithmic systems in court.

Although the Ministry of Electronics and Information Technology’s AI Governance Guidelines released in November 2025 reject what they describe as “compliance-heavy regulation” and assert that a standalone AI law is unnecessary at present, the report contends that reliance on existing statutes such as the Information Technology Act, 2000 and the Bharatiya Nyaya Sanhita, 2023 may prove inadequate in addressing emerging harms rooted in automated decision-making systems.

While the government’s summit vision of “Democratizing AI and Bridging the AI Divide,” anchored in the pillars of “People, Planet and Progress,” emphasises inclusive technological growth, the report maintains that the guidelines refer only broadly to “vulnerable groups” and fail to explicitly acknowledge the distinct risks faced by Muslims, Dalits, Adivasis, and LGBTQIA+ persons, thereby leaving critical gaps in targeted protections.

It further documents instances in which generative AI tools have amplified communal polarisation, including the circulation of AI-generated videos and images targeting Muslim communities and opposition leaders, some of which were shared by state units of the Bharatiya Janata Party before being deleted following public criticism.

Beyond online harms, the report raises concerns about the expanding deployment of AI in policing and surveillance, noting that predictive policing pilots in states such as Andhra Pradesh, Odisha, and Maharashtra may replicate entrenched biases embedded in historical crime data, while proposals to deploy linguistic AI tools to identify alleged undocumented migrants could invite discriminatory profiling.

The growing use of facial recognition technology in cities, including Delhi, Hyderabad, Bengaluru, and Lucknow, is also flagged as troubling, particularly because India lacks a dedicated legal framework comparable to the European Union’s risk-based AI regulation that restricts real-time biometric identification in public spaces.

The report also highlights exclusion risks in welfare delivery, observing that mandatory facial recognition authentication in schemes such as the Integrated Child Development Services may disproportionately affect poor and marginalised beneficiaries when technical failures occur.

While acknowledging India’s ambition to build sovereign AI capacity, the authors conclude that without binding transparency obligations, independent oversight, and explicit minority protections, the current governance model may deepen existing inequalities rather than democratise technological progress.