Scientists develop real-life invisibility cloak that hides you from object detectors

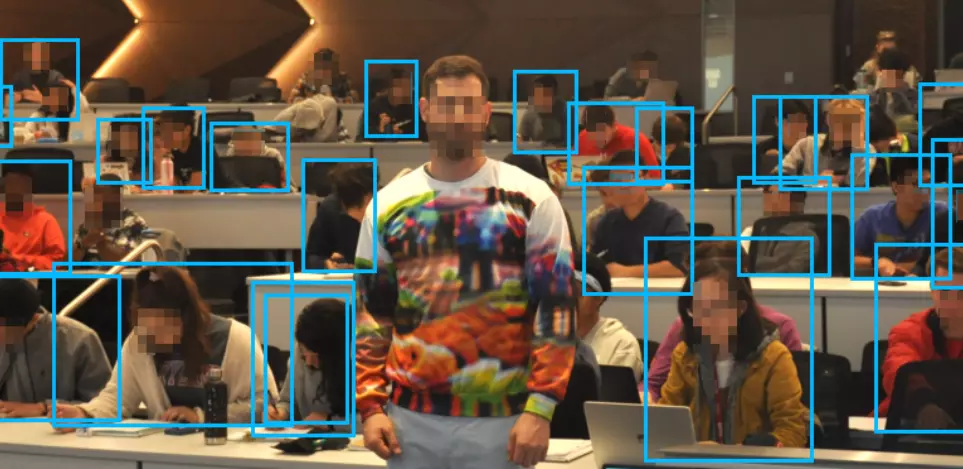

The invisibility cloak made popular by the Harry Potter book series may partially come true. Scientists at the University of Maryland have developed a colourful sweater that hides the wearer from the most common object detectors, such as AI-powered cameras.

The adversarial patterns on the sweater evade AI models that detect people. Technically, it is an invisibility cloak against AI. The developers were trying to test machine learning systems for vulnerabilities, but ended up creating a print that AI cameras cannot see, reported Gagadget.

However, cameras can still spot the person wearing the sweater; they just cannot recognise the individual with 100% certainty. According to a report in Hackster, the sweater has only a 50% success rate in the wearable test.

A paper published on the university's website explained the nature of the sweater. The team wrote: "It features a stay-dry microfleece lining, a modern fit, and an adversarial pattern that evades the most common object detectors. In our demonstration, the YOLOv2 detector is evaded using a pattern trained on the COCO dataset with a carefully constructed objective."

Researchers used the COCO dataset, on which the YOLOv2 algorithm is trained. The team identified the pattern that helps the algorithm recognise a person, then developed an opposing pattern and turned it into a print for clothes.

"Most work on real-world adversarial attacks has focused on classifiers, which assign a holistic label to an entire image, rather than detectors which localise objects within an image. Detectors work by considering thousands of 'priors' (potential bounding boxes) within the image with different locations, sizes, and aspect ratios. To fool an object detector, an adversarial example must fool every prior in the image, which is much more difficult than fooling the single output of a classifier," explained the team.
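The team's point about priors can be illustrated with a small sketch. This is not the researchers' code or their actual training objective; the function names, the 0.5 threshold, and the example scores are all hypothetical, chosen only to show why a detector is harder to fool than a classifier.

```python
from typing import Sequence


def classifier_fooled(person_score: float, threshold: float = 0.5) -> bool:
    """A classifier gives one score per image, so only one output must drop."""
    return person_score < threshold


def detector_fooled(prior_scores: Sequence[float], threshold: float = 0.5) -> bool:
    """A detector reports a person if ANY prior scores at or above the threshold,
    so evasion requires every prior to fall below it."""
    return all(score < threshold for score in prior_scores)


def evasion_loss(prior_scores: Sequence[float]) -> float:
    """Hypothetical objective for illustration: the highest 'person' score
    across all priors. Pushing this value down pushes every prior down."""
    return max(prior_scores)


# Hypothetical scores for four priors; a real detector evaluates thousands.
scores = [0.10, 0.20, 0.70, 0.05]

print(classifier_fooled(0.10))   # a single low score is enough for a classifier
print(detector_fooled(scores))   # one confident prior is enough to be detected
print(evasion_loss(scores))      # the score an adversarial pattern must reduce
```

In this toy example three of the four priors are already suppressed, yet the wearer is still detected because a single prior remains confident. That is the difficulty the team describes.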